Project: Annotation Extraction with Infrared Light

Despite ever improving digital ink and paper solutions, many people still prefer printing out documents for close reading, proofreading, or filling out forms. However, in order to incorporate paper-based annotations into digital workflows, handwritten text and markings need to be extracted. Common computer-vision and machine-learning approaches require extensive sets of training data or a clean digital version of the document. We propose a simple method for extracting handwritten annotations from laser-printed documents using multispectral imaging. While black toner absorbs infrared light, most inks are invisible in the infrared spectrum. We modified an off-the-shelf flatbed scanner by adding a switchable infrared LED to its light guide. By subtracting an infrared scan from a color scan, handwritten text and highlighting can be extracted and added to a PDF version. Initial experiments show accurate results with high quality on a test data set of 93 annotated pages. Thus, infrared scanning seems like a promising building block for integrating paper-based and digital annotation practices.

Status: ongoing

Runtime: 2020 -

Participants: Andreas Schmid, Lorenz Heckelbacher, Raphael Wimmer

Keywords: annotation extraction, scan, infrared

Background

Despite ever improving digital ink and paper solutions, many people still prefer printing out documents for close reading, proofreading, or filling out forms. When annotated documents are digitized, for example by scanning, advantages of the digital document, such as searching and copying text, get lost. In order to incorporate paper-based annotations into digital workflows, handwritten text and markings need to be extracted and inserted into the original digital document.

Status

Method

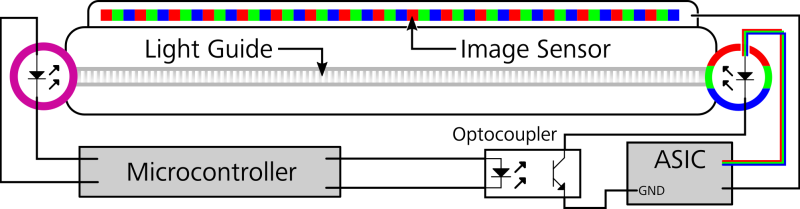

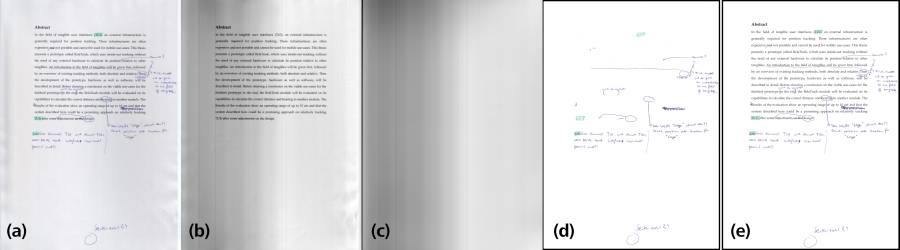

Infrared (IR) light can be used to extract handwritten annotations as many pens are not visible in the infrared spectrum. We modified an off-the-shelf flatbed scanner by adding an IR LED to its light guide at the opposite end of the RGB LED which is used to illuminate the document during the scanning process. By scanning a document in two passes - one with RGB and one with IR illumination - we get two images with pixel-perfect alignment. While the RGB image contains all color information including handwritten annotations, only black printer ink or toner are visible in the IR image. Annotations can then be extracted by creating a mask for printed text from the IR image and applying it to the RGB image.

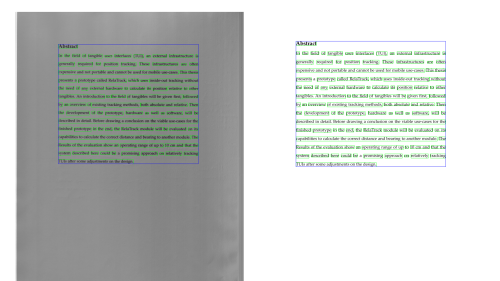

Figure 1. Handwritten annotations are not visible under infrared light.

Hardware

We modified a Canon LiDe CanoScan30 flatbed scanner by adding an IR LED (SFH 4346 with 940 nm, 20° emission angle, 90 mW/sr) to its light guide. Additionally, we added an optocoupler to the power connection of the RGB LED on the opposite side of the lightguide so both LED's can be switched on and off with a microcontroller (Wemos D32). The scanning process is initiated with a simple Python program on the connected PC which instructs the microcontroller to switch the LED's to the desired state via pyserial and controls the scanner with the python-sane library.

When the scan program is started, it switches the scanner to IR mode and performs an 8 bit grayscale scan. Afterwards it switches to RGB mode and performs an 24 bit color scan. Both scan passes are saved as PNG files. Currently, the whole process takes 73 seconds per page for scans with 300 dpi.

Image Processing

Annotation Extraction

To extract annotations, both RGB scan and IR scan have to be processed beforehand for best results. Additionally, an IR scan of the empty scanner is used as a bias image to compensate uneven illumination of the IR image. This bias image has to be captured only once.

After processing the scans, extracted annotations are saved as PNG files with a transparent background. These can be optionally inserted into a PDF document. Image preprocessing, annotation extraction, and inserting into a PDF is implemented in Python using the OpenCV 4 computer-vision library and the pdf-annotate library. Image processing takes about 80 seconds for a 10 page document and a scan resolution of 2539×3507 pixels (measured on a HP EliteBook 850 G4: Intel i7 CPU with 2.7 GHz, Intel HD Graphics 620, 16 GB RAM). As our unoptimized pipeline spends two thirds of that time with image loading and PDF generation, we see considerable room for improvement.

The following annotation extraction process is used to generate an IR image with white text and black background and an RGB image with white background and preserved colors.

As the IR scan is illuminated unevenly, the bias image is inverted and overlaid with cv2.addWeighted() using 50% alpha for both images to normalize illumination across the image. The result is then binarized with cv2.threshold() and inverted for the text to be white. We use a threshold value of 110. This value has to be tailored to the scanner's exposure - too low values lead to artifacts such as visible borders around printed text, too high values lead to bright annotations getting lost. As soft borders around printed text get lost in the binarization step, one iteration of cv2.dilate() with a 3×3 kernel is performed. White pixels are stretched vertically by shifting the whole image up and down by two pixels each and adding together the results.

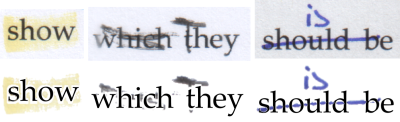

As the scanner we used captures one color at a time while moving across the document, color channels are not aligned perfectly, resulting in color fringing on the top (red) and bottom (blue) edges of letters of the RGB image. We reduce this fringing by extracting the red and blue channel of the RGB image, shifting them by one pixel in opposite vertical directions and blending them together with cv2.addWeighted().

The RGB image is then processed so that the background is plain white but the original color of annotations is preserved. To this end, the RGB image is converted to HSV color space so that the background can be masked by selecting all pixels with low saturation (less than 20) and high brightness value (more than 200) with cv2.inRange(). These pixels are then turned white.

As inline annotations such as highlighter or strikethrough overlap with printed text, they are subtracted from the processed IR image. To achieve this, regions with saturation over 100 and a brightness value between 10 and 245 are masked from a copy of the cleaned RGB image. Matching pixels are subtracted from the processed IR image to yield the final mask which is then added to the cleaned RGB image. The result contains only handwritten annotations on a plain white background. The white background is turned transparent before the image is saved as a PNG file.

Inserting Annotations into a PDF File

As an optional step after extraction, annotations can be inserted into the original PDF one page at a time as an image overlay. First, the desired PDF page is loaded as a NumPy array using the pdf2image Python library. The brightness of this page's IR scan is normalized using the bias image and its black and white points are set to minimum and maximum brightness to increase contrast.

To align PDF page and IR scan, bounding boxes around the text are calculated. Noise is removed using gaussian blur with a 9×9 kernel followed by an opening operation with a 21×21 kernel. A binarization of both images with cv2.adaptiveThreshold() removes possible remaining brightness gradients. Then, contours around individual text elements are calculated with cv2.findContours() and the minimum and maximum x and y values of those contours are used as a bounding box. Annotations are transformed into the PDF page's coordinate system by calculating a homography between both bounding boxes and using cv2.warpPerspective() on the extracted annotations.

Evaluation

Pen Comparison

As different pens have different absorption spectra, we compared scans of 42 different pens (14 sharpies, 18 felt-tip pens/highlighters, 6 pencils, 3 ballpoint pens, 2 other), as well as printed text in different colors, to determine how well they can be seen under infrared light. Only black toner, graphite pencils and some black felt-tip pens were visible. Additionally, dark board markers (blue and green) can be recognized very faintly. Indents in the paper caused by ballpoint pens can be seen in the infrared scan. As non-black printer ink and toner are also invisible under infrared light, our method for extracting annotations does not work for color prints, as colored parts would be recognized as annotations.

Real-World Data Set

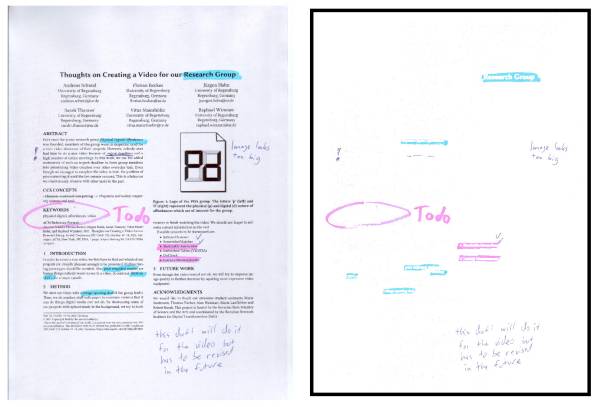

To evaluate our method for extracting annotations, we gathered a data set of annotated documents by letting ten participants (4 male, 6 female, aged 24-36) annotate ten pages of a real bachelor's thesis. Participants were asked to annotate the document as if they would proof-read a friend's thesis just before the deadline. As we knew our system does not work with color prints or pencil annotations, the document was printed in grayscale and participants could only use pens provided by us (blue and black ballpoint pen (generic brand); blue, black, red, and green sharpie (edding 89 office liner); yellow, pink, blue, and green highlighter (Stabilo Boss)).

All annotated pages were scanned with our modified scanner at 300 dpi (2539×3507 pixels) and annotations were counted and manually classified in terms of used pen, annotation type (categories: highlight, (border) note, underline, strikethrough, inline (note), arrow, other) and extraction quality (good/medium/bad). This classification was done by one person to counteract confounding effects due to different raters.

As seven pages without annotations were discarded, our data set consists of 93 annotated pages and contains a total of 1038 annotations. We considered extraction quality as good if there were no or only minor artifacts, as medium if there were some artifacts but the annotation was still recognizable, and as bad if significant parts of the annotation were missing or it was illegible. 83.6% of annotations were extracted with good quality, 13.8% with medium quality and 2.6% with bad quality. Extraction quality is impaired by annotations overlapping with printed text, as the text mask is cut out of the annotation. Therefore, the quality of strikethrough and highlight annotations was worse than for other annotation types.

Inserting annotations into the original PDF has worked for all 93 pages. In some cases, there was slight misalignment in vertical direction with a maximum offset of 11 pixels, which is about half the height of a lowercase letter. Even though all annotations in our data set were still recognizable and assignable to their correct location, this could lead to a strikethrough annotation being misrepresented as underline in the worst case.

Limitations and Future Work

Even though we have shown that infrared scans can be used to extract handwritten annotations from printed documents, our method still has some limitations. Due to their absorption spectra, our method does not work with either color prints or annotations with certain pens, such as graphite pencils.

Currently, extracted annotations are inserted into the original PDF as one image overlay per page. By implementing annotation segmentation and classification into our system, highlight, underline, and strikethrough annotations could be automatically inserted as digital annotations into the PDF. This would also solve the problem of artifacts in inline annotations due to slight misalignment or masking borders around printed text.

A further possible application of our method which we did not discuss in this paper is document cleaning. For example, handwritten annotations could be removed from scans to recover the state of the original document. It is also possible to remove certain types of stains from scanned documents, if the substance is invisible under infrared light.

Another extension of our basic method would be to augment paper documents with information printed using ink that is only visible in the infrared spectrum. This would allow for printing invisible markers on documents to augment them with machine-readable information, such as unique ID's or the position of form fields.

Publications

Andreas Schmid, Lorenz Heckelbacher, Raphael Wimmer

Extended Abstracts of the 2022 CHI Conference on Human Factors in Computing Systems

We modified a flatbed scanner by adding an infrared LED to its light guide. As the ink of most pens is invisible in the infrared spectrum, this scanner can be used to extract handwritten annotations from printed documents. (Tweet this with link)

References

Source Code: https://github.com/PDA-UR/ir-annotation-extraction

Data Set: https://files.mi.ur.de/f/68c38af378124c25b36e/?dl=1 (35 mb)